So now consider a network architecture that uses a block like this. There may be two different types, and I'm going to call them residual type 1, which is colored in blue, and residual type 2, which is in orange. Your architecture could define a dense layer, then a type 1 residual, then three type 2 residuals, followed by another dense layer. What I'm referring to as type 1 or type 2 here aren't formal types; they're just slightly different blocks that you've defined. In the previous slides we had two dense layers with a shortcut around them. Let's call that type 2, and perhaps there's a different block that might have two convolutional layers with a shortcut around them. So for example, if you have the block we defined earlier as residual type 2, you could have three of them in sequence in your architecture here. But one of the golden rules of programming is don't repeat yourself.
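To make the "don't repeat yourself" point concrete, here is a minimal framework-free sketch of that layout: a dense layer, one type 1 residual, three type 2 residuals built in a loop, and a final dense layer. Everything in it is an assumption for illustration, not the TensorFlow code from the video: `scale_layer` is a stand-in for a real dense or convolutional layer, and `make_residual`, `residual_type_1`, `residual_type_2`, and `run` are hypothetical names.

```python
def scale_layer(factor):
    """Stand-in for a real layer (dense or convolutional): scales each value."""
    return lambda x: [factor * v for v in x]


def make_residual(layers):
    """Build a residual block: a main path through `layers`, plus a shortcut."""
    def block(x):
        out = x
        for layer in layers:
            out = layer(out)
        # Shortcut: add the unaltered input to the main-path output.
        return [a + b for a, b in zip(x, out)]
    return block


def residual_type_1():
    # Assumed here to be the convolutional variant; two stand-in layers.
    return make_residual([scale_layer(0.5), scale_layer(0.5)])


def residual_type_2():
    # Assumed here to be the dense variant; two stand-in layers.
    return make_residual([scale_layer(2.0), scale_layer(0.5)])


# Define each block type once, then reuse it instead of copying its layers.
model = (
    [scale_layer(1.0)]                        # first dense layer (stand-in)
    + [residual_type_1()]                     # one type 1 residual
    + [residual_type_2() for _ in range(3)]   # three type 2 residuals
    + [scale_layer(1.0)]                      # final dense layer (stand-in)
)


def run(stages, x):
    """Pass x through every stage in order."""
    for stage in stages:
        x = stage(x)
    return x


output = run(model, [1.0])
```

The three type 2 blocks come from a single definition and a loop, so changing what a type 2 block contains means editing one function rather than three copies.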

RESIDUAL NETWORK CODE

So for example, if we were to define this in a code block or a function, we could encapsulate the entire flow a little bit like this.
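As a rough illustration of wrapping the whole flow in one function, the sketch below runs an input through a list of main-path layers and then adds the original input back. It is plain Python rather than the TensorFlow code shown in the video, and `dense` and `residual_block` are hypothetical helpers with made-up weights.

```python
def dense(weights, biases):
    """Return a toy dense layer with ReLU: y = relu(W x + b)."""
    def layer(x):
        return [
            max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)
        ]
    return layer


def residual_block(x, main_path):
    """Run x through the main-path layers, then add the original x back."""
    out = x
    for layer in main_path:
        out = layer(out)
    # The shortcut carries x around the layers unaltered.
    return [a + b for a, b in zip(x, out)]


# Made-up weights: identity matrices keep the arithmetic easy to follow.
identity = [[1.0, 0.0], [0.0, 1.0]]
two_dense_layers = [dense(identity, [0.0, 0.0]), dense(identity, [1.0, 1.0])]

result = residual_block([2.0, 3.0], two_dense_layers)
```

Because the function takes the main path as an argument, the same wrapper works whether the block holds dense layers or some other kind, as long as the output has the same size as the input.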

RESIDUAL NETWORK PLUS

The shortcut data is typically summed with the main path data, so that the output of the block isn't just the modified data from passing through the dense layers, but the original plus the altered data.
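The sum itself can be sketched in a few lines. This assumes an element-wise addition of two equal-length lists of numbers; `add_shortcut` and the sample values are hypothetical, chosen only to show the "original plus altered" idea.

```python
def add_shortcut(shortcut, main_path_output):
    """Element-wise sum of the unaltered input and the main-path output."""
    if len(shortcut) != len(main_path_output):
        raise ValueError("shortcut and main path must have the same length")
    return [s + m for s, m in zip(shortcut, main_path_output)]


# Hypothetical values: the block's input, and what the main path produced.
block_input = [1.0, 2.0, 3.0]
altered = [0.5, -1.0, 0.25]

block_output = add_shortcut(block_input, altered)
```

The length check reflects a practical constraint: the two paths can only be summed when their shapes match.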

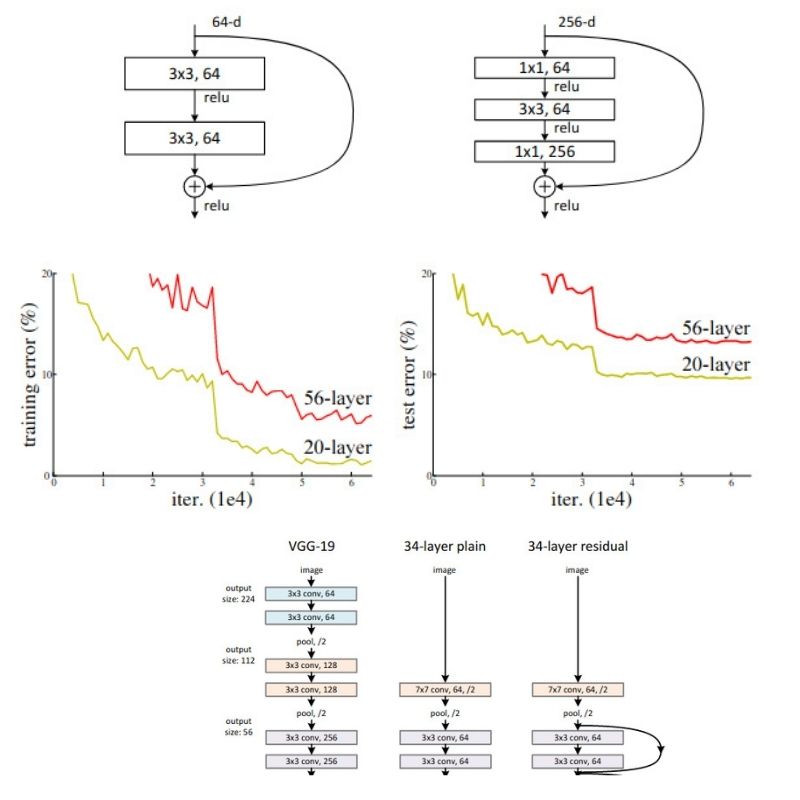

Andrew explains the details in this video in his Convolutional Neural Networks course, so you should really check it out. Ultimately, ResNets work by having an alternative path from the input to the output, and it can be represented like this. So for example, this block might have two dense layers. Data flows through it as we're familiar with, in a path I'm going to call the main path. But a ResNet also has a shortcut path where the data doesn't go through the same route, and it might flow through this block completely unaltered.

The example I look at in this video is a simplified version of a residual network, or ResNet for short. This type of network architecture has been shown in research to help you increase the depth of your network while potentially not losing accuracy.

RESIDUAL NETWORK SOFTWARE

This Specialization is for early and mid-career software and machine learning engineers with a foundational understanding of TensorFlow who are looking to expand their knowledge and skill set by learning advanced TensorFlow features to build powerful models. The DeepLearning.AI TensorFlow: Advanced Techniques Specialization introduces the features of TensorFlow that provide learners with more control over their model architecture and tools that help them create and train advanced ML models.

RESIDUAL NETWORK HOW TO
